Unlocking Academic Excellence: Using Generative AI to Create Custom Rubrics

Rubrics are more than an evaluation tool; they help set student expectations, increase grading consistency, and promote student independence (Andrade & Du, 2005; Chen et al., 2013; Christie et al., 2015; Timmerman et al., 2011; Jonsson, 2014; Panadero & Romero, 2014; Menendez-Varela & Gregori-Giralt, 2016). Well-designed rubrics allow instructors to provide targeted and more objective feedback while also minimizing grading time (Cambell, 2006; Powell, 2001; Reitmeier et al., 2004). While the benefits of rubrics are clear, their creation can often be time-consuming at the front end of assignments. The solution? Use generative AI to create custom rubrics for your courses.

A well-designed rubric outlines clear performance expectations and provides students with targeted feedback. It comprises three key elements: evaluation criteria, a scoring scale, and descriptions of quality for each criterion. It is the third element that makes rubric design so challenging. Criteria identify which features of the task will be assessed, and the scoring scale rates performance quality, but it is the descriptors that help students accurately assess their own performance and strategize to improve accordingly.

As an instructor, you can streamline your rubric creation process by pairing these three elements with a generative AI tool such as Microsoft Copilot or ChatGPT. To start, we must design an AI prompt outlining our needs. This prompt should include the assignment or task, the course objectives, the scoring scale, the desired criteria, and instructions for descriptors. Consider the example below, a problem designed to assess students’ understanding of Newton’s Laws of Motion:

Task:

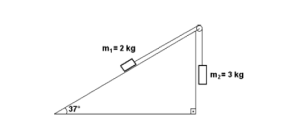

The values of masses m1 and m2 are 2 kg and 3 kg, respectively, in the system shown in the attached image. The friction coefficient between the inclined plane and mass m1 is 0.5. If the system is released, find the values of acceleration and tension in the string. (sin 37° = 0.6, cos 37° = 0.8, g = 10 m/s²)

Prompt Engineering:

To create an effective prompt, we first need to tell the AI platform what we want it to do. In this case, we want it to design a rubric. We can say:

Create a well-crafted and clear rubric for students in the form of a table using student-friendly language.

Next, we need to include the assignment description by simply copying and pasting the instructions. For tasks that include an image, like our physics example above, have the image available as a separate file to upload into the generative AI platform. If the generative AI platform cannot read or interpret pictures or images, then write a detailed description of the image. At the time this article was published, Copilot was able to interpret images while the free version of ChatGPT (3.5) was not. We can say:

The rubric is for the following student task description: The values of masses m1 and m2 are 2 kg and 3 kg, respectively, in the system shown in the attached image. The friction coefficient between the inclined plane and mass m1 is 0.5. If the system is released, find the values of acceleration and tension in the string. (sin 37° = 0.6, cos 37° = 0.8, g = 10 m/s²)

The language and terminology used in rubrics should align with course objectives, which means we should also include the course learning objectives in our prompt. For our physics example, we can say:

The rubric should be aligned with the following course learning objectives: Upon successfully completing this course, you will have come to understand the basic principles governing the motion of objects, learned to think more critically/scientifically, and developed the skills needed to attack difficult problems. These are all skills that will serve you strongly in your future courses and careers, even if you never again consider a block sliding down an incline.

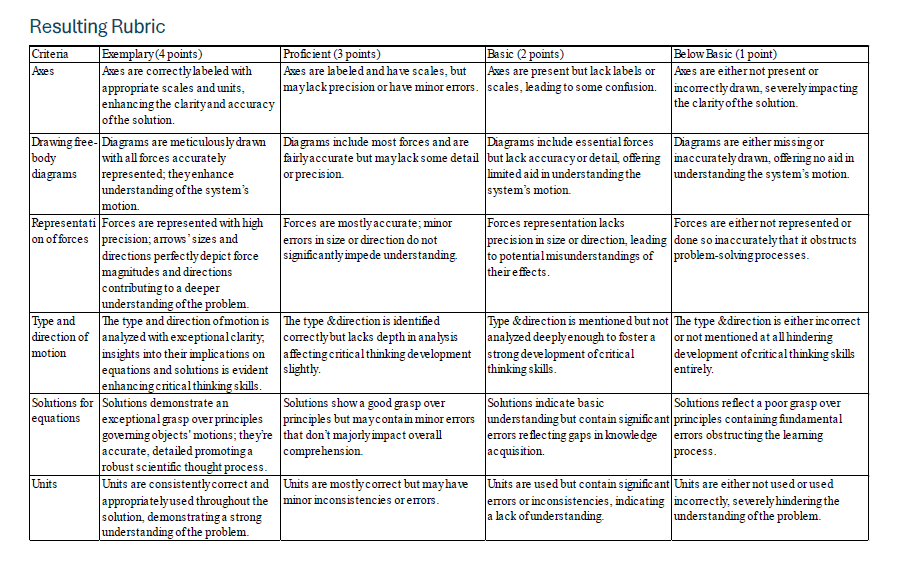

Next, we need to tell the AI platform what type of rubric we would like to create. This includes the three essential parts of a rubric. For our physics example, we can say:

The rubric should contain three parts: Scoring and Scale, Criteria, and Descriptors. Use the following scoring scale for the rubric:

- Exemplary (4 points)

- Proficient (3 points)

- Basic (2 points)

- Beginning (1 point)

Include the following criteria for each element of the scoring scale I just mentioned above:

- Axes

- Drawing free-body diagrams

- Representation of forces

- Type and direction of motion

- Solutions for equations

- Units

Next, we need to provide a clear description of the type of descriptors we need for each criterion. This is often the most difficult and time-intensive part of rubric creation, but AI can quickly do this task in student-friendly language. Continuing with our example, we can say:

For each of the criteria and each scoring scale, generate a descriptor that focuses on describing the quality of the work rather than simply the quantity. Emphasize what constitutes exemplary, proficient, basic, and beginning performance in terms of meeting the objectives of the task, rather than just the quantity of work produced. For example, descriptors should highlight the depth of understanding, clarity of communication, accuracy of information, relevance to the topic, adherence to conventions, and effectiveness of practical implications, among other qualitative aspects.

Finally, we need to tell the AI platform what rubric form we would like. The most common form is a table. We can say:

Generate the rubric in the form of a table. The first row heading for the table should include the scoring scale and points. The first column on the left of the table should display the criteria. The descriptors for each component and score should be listed under the correct scoring scale and points column and criteria row. Make the descriptors in the table as specific to the objectives as possible.
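To make the requested layout concrete, here is a minimal Python sketch that builds an empty Markdown table with the scoring scale across the first row and the criteria down the first column. The function name `rubric_skeleton` and the placeholder text are illustrative assumptions, not part of the original talk; the descriptor cells are what the AI platform is asked to fill in.

```python
# Illustrative sketch: names and placeholder text are assumptions.
SCALE = [("Exemplary", 4), ("Proficient", 3), ("Basic", 2), ("Beginning", 1)]
CRITERIA = [
    "Axes",
    "Drawing free-body diagrams",
    "Representation of forces",
    "Type and direction of motion",
    "Solutions for equations",
    "Units",
]


def rubric_skeleton(scale, criteria, placeholder="(descriptor)"):
    """Return a Markdown table: scale levels as columns, criteria as rows."""
    def points(n):
        return f"{n} point" if n == 1 else f"{n} points"

    # First row: a corner label followed by each scale level and its points.
    header = ["Criteria"] + [f"{name} ({points(n)})" for name, n in scale]
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join(["---"] * len(header)) + " |",
    ]
    # One row per criterion, with one descriptor cell per scale level.
    for criterion in criteria:
        cells = [criterion] + [placeholder] * len(scale)
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


print(rubric_skeleton(SCALE, CRITERIA))
```

With six criteria and four scale levels, the grid has 24 descriptor cells, which is why writing descriptors by hand tends to be the slowest part of rubric design.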

When we put all of this together into one prompt, we generated the following rubric.

From here, you can edit the rubric yourself or refine your prompt and generate it again. Instead of spending your time creating a rubric from scratch for each assignment, you can use this formula to have AI do the work for you.
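If you reuse this formula across many assignments, the prompt parts can be assembled with a small helper. The following is a minimal Python sketch under stated assumptions: the function name `build_rubric_prompt`, its parameters, and the parenthesized placeholder strings are hypothetical, and the descriptor and table instructions are shortened here, so paste in the full wording from the steps above. The sketch only builds the prompt text; you still paste it into the AI platform of your choice.

```python
# Illustrative sketch: function and parameter names are assumptions.
def build_rubric_prompt(task, objectives, scale, criteria):
    """Join the prompt parts into one string, in the order described above."""
    scale_lines = "\n".join(f"- {level}" for level in scale)
    criteria_lines = "\n".join(f"- {c}" for c in criteria)
    parts = [
        "Create a well-crafted and clear rubric for students in the form of "
        "a table using student-friendly language.",
        f"The rubric is for the following student task description: {task}",
        "The rubric should be aligned with the following course learning "
        f"objectives: {objectives}",
        "The rubric should contain three parts: Scoring and Scale, Criteria, "
        "and Descriptors. Use the following scoring scale for the rubric:\n"
        + scale_lines,
        "Include the following criteria for each element of the scoring "
        "scale:\n" + criteria_lines,
        # Shortened: substitute the full descriptor instructions here.
        "For each of the criteria and each scoring scale, generate a "
        "descriptor that focuses on describing the quality of the work "
        "rather than simply the quantity.",
        # Shortened: substitute the full table instructions here.
        "Generate the rubric in the form of a table.",
    ]
    return "\n\n".join(parts)


prompt = build_rubric_prompt(
    task="(paste the assignment instructions here)",
    objectives="(paste the course learning objectives here)",
    scale=[
        "Exemplary (4 points)",
        "Proficient (3 points)",
        "Basic (2 points)",
        "Beginning (1 point)",
    ],
    criteria=[
        "Axes",
        "Drawing free-body diagrams",
        "Representation of forces",
        "Type and direction of motion",
        "Solutions for equations",
        "Units",
    ],
)
print(prompt)
```

Keeping the scale and criteria as plain lists means only the task description, objectives, and criteria need to change from one assignment to the next.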

This blog post is adapted from CTL faculty Amanda Nolen’s “AI-Powered Rubrics” talk at the 2024 Georgia Tech Symposium for Lifetime Learning. View her presentation slides, examples, and prompt scripts that can be adapted for your own assignments/courses. To learn more about rubrics and assessment criteria, visit CTL’s online resource on the topic.

References:

Andrade, H., & Du, Y. (2005). Knowing what counts and thinking about quality: students report on how they use rubrics. Practical Assessment, Research and Evaluation, 10(4), 57-59.

Chen, H. J., She, J. L., Chou, C. C., Tsai, Y. M., & Chiu, M. H. (2013). Development and application of a scoring rubric for evaluating students’ experimental skills in organic chemistry: An instructional guide for teaching assistants. Journal of Chemical Education, 90(10), 1296-1302.

Christie, M., Grainger, P. R., Dahlgren, R., Call, K., Heck, D., & Simon, S. E. (2015). Improving the quality of assessment grading tools in master of education courses: A comparative case study in the scholarship of teaching and learning. Journal of the Scholarship of Teaching and Learning, 15(5), 22-35.

Howell, R. J. (2014). Grading rubrics: Hoopla or help? Innovations in Education and Teaching International, 51(4), 400-410.

Jonsson, A. (2014). Rubrics as a way of providing transparency in assessment. Assessment & Evaluation in Higher Education, 39(7), 840-852.

Menéndez-Varela, J. L., & Gregori-Giralt, E. (2018). Rubrics for developing students’ professional judgement: A study of sustainable assessment in arts education. Studies in Educational Evaluation, 58, 70-79.

Panadero, E., & Romero, M. (2014). To rubric or not to rubric? The effects of self-assessment on self-regulation, performance and self-efficacy. Assessment in Education: Principles, Policy & Practice, 21(2), 133-148.

Powell, T. A. (2002). Improving assessment and evaluation methods in film and television production courses. Capella University.

Reitmeier, C. A., Svendsen, L. K., & Vrchota, D. A. (2004). Improving oral communication skills of students in food science courses. Journal of Food Science Education, 3(2), 15-20.

Timmerman, B. E. C., Strickland, D. C., Johnson, R. L., & Payne, J. R. (2011). Development of a ‘universal’ rubric for assessing undergraduates’ scientific reasoning skills using scientific writing. Assessment & Evaluation in Higher Education, 36(5), 509-547.